Description

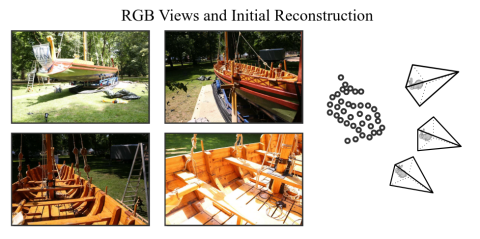

Surface reconstructions from 3D scans, e.g., quick object captures with consumer devices, often lack detail because the depth images have low resolution. The RGB images captured simultaneously by the camera, in contrast, have ~100 times higher resolution. We want to use the coarse geometry as a scaffold and fill in detail by triangulating the RGB images. ADOP [1] solves a similar problem but requires 4 hours of training. Can a purely geometric method approximate this in real time?

Tasks

- integrate depth images from a sensor (time-of-flight or LIDAR) that also has an RGB camera (iPad, or similar tablet/smartphone) using a suitable open source SLAM framework, e.g., OKVIS2-X [2], and store the corresponding RGB images, reprojected to the sensor's viewpoint

- compute 3D connectivity and visibility from the depth images to form the coarse geometry scaffold

- project the RGB images onto this scaffold and optimize the fine in-between geometry so that a common view agrees with the images from the other viewpoints, using gradient descent (inspired by 3D Gaussian splats but without learning)

- evaluate the quality/runtime trade-off against slow, high-quality methods such as ADOP [1], as well as temporal coherence under continuous scan updates
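As a rough illustration of the second task only (connectivity, not visibility), the sketch below back-projects a single depth image into 3D and connects neighbouring pixels into triangles unless a depth discontinuity separates them. It is a minimal sketch under stated assumptions, not a prescribed design: the names `Intrinsics`, `Scaffold`, `buildScaffold` and `maxJump` are hypothetical, the camera is assumed to be an undistorted pinhole, and the depth image is assumed to be row-major, in metres, with non-positive values marking invalid pixels.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical pinhole intrinsics of the depth sensor, in pixels.
struct Intrinsics { float fx, fy, cx, cy; };

struct Vec3 { float x, y, z; };

// Coarse scaffold: one vertex per depth pixel; triangles index into `vertices`.
struct Scaffold {
    std::vector<Vec3> vertices;
    std::vector<std::array<int, 3>> triangles;
};

// Back-project pixel (u, v) with depth z (along the optical axis) into camera space.
inline Vec3 backproject(const Intrinsics& k, int u, int v, float z) {
    return { (u - k.cx) / k.fx * z, (v - k.cy) / k.fy * z, z };
}

// Three pixels are connected only if all depths are valid (positive, not NaN)
// and differ by at most maxJump, i.e. no depth discontinuity lies between them.
inline bool connected(float a, float b, float c, float maxJump) {
    if (!(a > 0.f) || !(b > 0.f) || !(c > 0.f)) return false;
    const float lo = std::min({a, b, c});
    const float hi = std::max({a, b, c});
    return hi - lo <= maxJump;
}

// Build the scaffold from a row-major depth image of size width x height.
// Invalid pixels still produce a (degenerate) vertex so that vertex index
// v * width + u always corresponds to pixel (u, v); they are never referenced
// by a triangle.
Scaffold buildScaffold(const std::vector<float>& depth, int width, int height,
                       const Intrinsics& k, float maxJump) {
    assert(width > 0 && height > 0);
    assert(depth.size() == static_cast<std::size_t>(width) * height);

    Scaffold s;
    s.vertices.reserve(depth.size());
    for (int v = 0; v < height; ++v)
        for (int u = 0; u < width; ++u)
            s.vertices.push_back(backproject(k, u, v, depth[v * width + u]));

    // Split every 2x2 pixel cell into two triangles along the same diagonal.
    for (int v = 0; v + 1 < height; ++v) {
        for (int u = 0; u + 1 < width; ++u) {
            const int i00 = v * width + u;   // top-left
            const int i10 = i00 + 1;         // top-right
            const int i01 = i00 + width;     // bottom-left
            const int i11 = i01 + 1;         // bottom-right
            if (connected(depth[i00], depth[i01], depth[i10], maxJump))
                s.triangles.push_back(std::array<int, 3>{i00, i01, i10});
            if (connected(depth[i10], depth[i01], depth[i11], maxJump))
                s.triangles.push_back(std::array<int, 3>{i10, i01, i11});
        }
    }
    return s;
}
```

A call such as `buildScaffold(depth, width, height, k, 0.05f)` would return the vertices and triangles for one frame. The fixed threshold `maxJump` is a simplification: a tolerance that grows with depth may be more appropriate for real sensor noise, and the per-pixel loops map naturally onto one CUDA thread per pixel.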

[1] ADOP: Approximate Differentiable One-Pixel Point Rendering, https://arxiv.org/abs/2110.06635

[2] OKVIS2-X, https://arxiv.org/html/2510.04612v1

Image credit: [1]

Requirements

- Knowledge of C++

- Knowledge of English language (source code comments and final report should be in English)

- Bonus for experience with CUDA or geometry processing

Environment

Standalone C++/CUDA application (platform-independent, tested on Linux and Windows)

A bonus of €500 or €1000 is paid if the project is completed to satisfaction within an agreed time frame of 6 or 12 months, respectively (PR/BA or DA)